By Amy Castor and David Gerard


Sam and Ilya, back in the happier days of June 2023

Sam Altman, the CEO of OpenAI, was unexpectedly fired by the board on Friday afternoon. CTO Mira Murati is filling in as interim CEO. [Press release, archive]

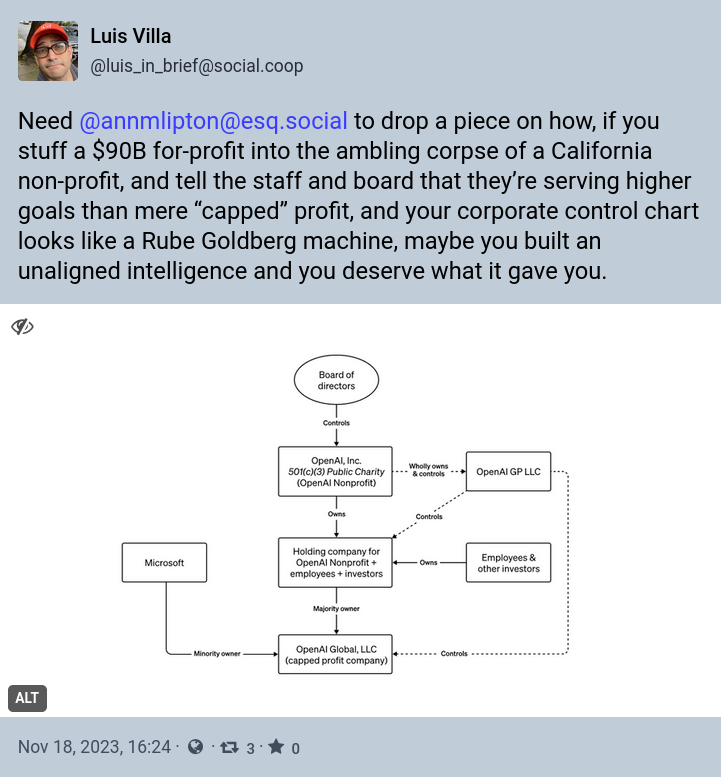

OpenAI is a nonprofit with a commercial arm. (This is a common arrangement when a nonprofit finds it’s making too much money. Mozilla is set up similarly.) The nonprofit controls the commercial company — and they just exercised that control. [OpenAI]

Microsoft invested $13 billion to take ownership of 49% of the OpenAI for-profit — but not of the OpenAI nonprofit. Microsoft found out Altman was being fired one minute before the board put out its press release, half an hour before the stock market closed on Friday. MSFT stock dropped 2% immediately. [Axios]

This board decision just cost Microsoft billions. We wonder if they’ll sue.

So what caused this?

The board wasn’t specific, but their statement reads like fighting words:

“he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities. The board no longer has confidence in his ability to continue leading OpenAI.”

You just don’t call your CEO a liar in the press release! Even CEOs who get fired for malfeasance, sexual misconduct, or lying their asses off to their board are let go with polite fake smiles!

The news came out of nowhere for almost everyone. OpenAI cofounder Greg Brockman was kicked off the board as well and quit the company immediately. Multiple OpenAI researchers have also quit their jobs. [Twitter, archive; Twitter, archive]

OpenAI executive Brad Lightcap told employees on Saturday morning that “the board’s decision was not made in response to malfeasance or anything related to our financial, business, safety or security/privacy practices. This was a breakdown in communication between Sam and the board.” [Axios]

The world is presuming that there’s something absolutely awful about Altman just waiting to come out. But we suspect the reason for the firing is much simpler: the AI doom cultists kicked Altman out for not being enough of a cultist.

Here’s Wired on OpenAI from September 2023: [Wired, archive]

“It’s not fair to call OpenAI a cult, but when I asked several of the company’s top brass if someone could comfortably work there if they didn’t believe AGI [artificial general intelligence] was truly coming — and that its arrival would mark one of the greatest moments in human history — most executives didn’t think so. Why would a nonbeliever want to work here? they wondered.”

What if it was fair to call OpenAI a cult, though?

Until Friday, OpenAI had a board of only six people: Greg Brockman (chairman and president), Ilya Sutskever (chief scientist), and Sam Altman (CEO), plus outside members Adam D’Angelo, Tasha McCauley, and Helen Toner.

Sutskever, the researcher who got OpenAI going in 2015, is deep into “AI safety” in the sense of Eliezer Yudkowsky. Toner and McCauley are Effective Altruists — that is to say, part of the same cult.

Eliezer Yudkowsky founded a philosophy he called “rationality” — which bears little relation to any other philosophy of such a name in history. He founded the site LessWrong to promote his ideas. He also named “Effective Altruism,” on the assumption that the most effective altruism in the world was to give him money to stop a rogue superintelligent AI from turning everyone into paperclips.

The “ethical AI” side of OpenAI are Yudkowsky believers, including Mira Murati, the CTO who is now CEO. They are AI doomsday cultists who say they don’t think ChatGPT will take over the world — but behave like they do think that. [OpenAI]

D’Angelo doesn’t appear to be an AI doomer — but presumably Sutskever convinced him to kick Altman out anyway.

Yudkowsky endorsed Murati’s promotion to CEO: “I’m tentatively 8.5% more cheerful about OpenAI going forward.” [Twitter, archive]

We’ve written before about how everything in machine learning is hand-tweaked and how so much of what OpenAI does relies on underpaid workers in Africa and elsewhere. This stuff doesn’t yet work as any sort of product without a hidden workforce of humans behind it, pushing. The GPT series are just powerful autocomplete systems. They aren’t going to turn you into paperclips.

Sam Altman was an AI doomer — just not as much as the others. The real problem was that he was making promises that OpenAI could not deliver on. The GPT series was running out of steam. Altman was out and about in the quest for yet more funding for the OpenAI company in ways that upset the true believers.

A boardroom coup by the rationalist cultists is quite plausible, as well as being very funny. Rationalists’ chronic inability to talk like regular humans may even explain the statement calling Altman a liar. It’s standard for rationalists to call people who don’t buy their pitch liars.

So what from normal people would be an accusation of corporate war crimes is, from rationalists, just how they talk about the outgroup of non-rationalists. They assume non-believers are evil.

It is important to remember that Yudkowsky’s ideas are dumb and wrong, he has zero technological experience, and he has never built a single thing, ever. He’s an ideas guy, and his ideas are bad. OpenAI’s future is absolutely going to be wild.

There are many things to loathe Sam Altman for — but not being enough of a cultist probably isn’t one of them.

We think more comedy gold will be falling out over the next week.

In the meantime, Worldcoin, Altman’s eyeballs-on-the-blockchain token, dropped 10% on news of his firing from OpenAI. [TechCrunch]

Update: Venture capitalists who depend on OpenAI are trying to get Microsoft to strong-arm the OpenAI board into taking Altman back as CEO. Failing that, they’ll try to fund Altman and Brockman to start a new company they can hook their startups to. [Forbes]

Update 2: Microsoft has hired Altman and Brockman to head up its AI efforts. OpenAI has hired Emmett Shear, former CEO of Twitch, as its new interim CEO. [CNN]


“[T]entatively 8.5% more cheerful” 97% has to be deliberately tongue-in-cheek. AGI might never achieve true self-awareness, but Eliezer Yudkowsky tentatively has.

I found this useful:

https://daringfireball.net/2023/11/more_altman_openai

“A simple way to look at it is to read OpenAI’s charter, “the principles we use to execute on OpenAI’s mission”. It’s a mere 423 words, and very plainly written. It doesn’t sound anything at all like the company Altman has been running. The board, it appears, believes in the charter. How in the world it took them until now to realize Altman was leading OpenAI in directions completely contrary to their charter is beyond me.”

OpenAI, creators of a system notorious for spewing misinformation and inaccuracies, fires Altman for misinformation and inaccuracies.

Talk about a lack of self-awareness…

Looks like the remaining OpenAI board are about to get a lesson in the truth of the saying ‘he who pays the piper calls the tune’.

Hang on, I should be on the side of Sam Altman? I again state clearly: David and Amy always know more terrible nonsense about the world than I do and it always makes me regret having found out.

Heartwarming: The Worst People You Know etc

Everyone needs to get over the idea that there are “two” sides to every conflict. Sometimes everyone involved is in the wrong. And this seems to be one of those times.

we just hope they all have a nice time!

I was wondering what was going on, and this sounds stupid enough to be true.

I just want to know who Ilya Sutskever’s tailor is. . . so I can avoid them.

Sam, you made the pants too short and the coat too small. . .

This was a great little read. So nice to get the views of someone who has a deep background in this and can explain it simply and clearly.

The way that AI rationalists are described as using accusations of lying echoes the practice of Randian objectivists, for whom the greatest sin is “evasion” — meaning, in effect, the failure to come around to Ayn Rand or Leonard Peikoff’s view on a matter.