- Amy is still busy, so David wrote this one up. Expect proper spelling and eschewing the AP style guide.

- We need your support for more posts like this. Send us money! Here’s Amy’s Patreon, and here’s David’s. Sign up today!

- If you like this post — please tell just one other person.

“GPT5 has averaged photos of all website owners and created a platonic ideal of a sysadmin: [insert photo of Parked Domain Girl]”

— Jookia

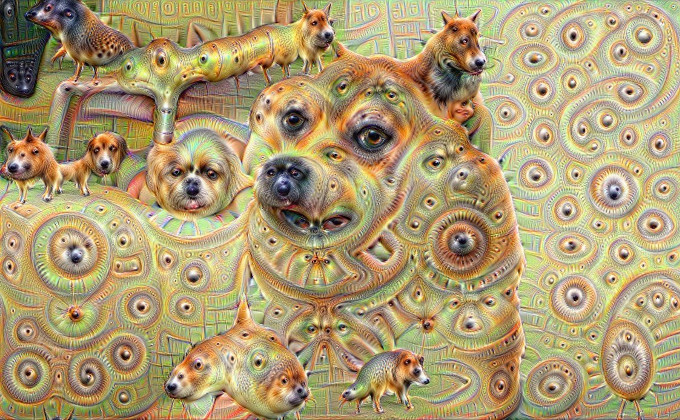

Deep Dream output, 2015 — a Biblically accurate doge. Switch the site to dark mode for best results.

Lucy in the skAI with diamonds

A vision came to us in a dream — and certainly not from any nameable person — on the current state of the venture capital-fueled AI and machine learning industry. We asked around and several others who work in the field concurred with this assessment.

Generative AI is famous for “hallucinating” made-up answers with wrong facts. These are crippling to the credibility of AI-driven products.

The bad news is that the hallucinations are not decreasing. In fact, the hallucinations are getting worse.

Large language models work by generating output based on what tokens statistically follow from other tokens. They are extremely capable autocompletes.

All output from an LLM is a “hallucination”, generated from the latent space between the training data. LLMs are machines for generating convincing-sounding nonsense; “facts” are not a type of data in LLMs.

But if your input contains mostly facts, then the output has a better chance of not being just nonsense.
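For the curious, here is a toy sketch of the “autocomplete” idea. It is nowhere near a real LLM: it is a word-pair counter over a made-up three-sentence corpus, and the names `train_bigrams` and `autocomplete` are ours, purely for illustration. But it shows the basic move: pick whatever statistically tends to come next, with no notion of whether the result is true.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows which in the training text."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def autocomplete(follows, prompt, max_words=5):
    """Greedily append the most frequent next word; stop if nothing follows."""
    out = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(out[-1])
        if not candidates:
            break
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


# Hypothetical training data: every sentence in it is perfectly sensible.
corpus = (
    "the cat sat on the mat . "
    "the cat sat on the sofa . "
    "the dog ate the fish ."
)
model = train_bigrams(corpus)
print(autocomplete(model, "the", max_words=5))
```

This prints “the cat sat on the cat”: fluent, statistically justified and wrong. Every word pair in the output appears in the training text, but the sentence as a whole does not, and the model has no way to notice.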

Unfortunately, the venture-capital-funded AI industry runs on the promise of replacing humans with a very large shell script — including in areas where details matter. If the AI’s output is just plausible nonsense, that’s a problem. So the hallucination issue is causing a slight panic among AI company leadership.

More unfortunately, the AI industry has run out of training data that isn’t tainted with previous AI output. So they’re seriously considering doing the stupidest thing possible: training AIs on the output of other AIs. This is already well known to make the models collapse into gibberish. [WSJ, archive]

AI companies are starting to talk up “emergent capabilities” again — where an AI suddenly becomes useful for things it wasn’t developed for, like translating languages it wasn’t trained on. You know — magic.

Every claim ever made of “emergent capabilities” has turned out to be an irreproducible coincidence or data that the model was in fact trained on. Magic doesn’t happen. [Stanford, 2023]

The current workaround in AI is to hire fresh master’s graduates or PhDs to try to fix the hallucinations. The companies try to underpay the fresh grads on the promise of future wealth, or at least a high-status position in the AI doomsday cult. OpenAI, for example, is notoriously high on the cultish workplace scale: it doesn’t understand why anyone would want to work there if they weren’t true believers.

If you have a degree with machine learning in it, gouge them for every penny you can while the gouging is good.

Remember when AI had proper hallucinations? Eyeballs! Spurious dogs! [Fast Company, 2015]

When the money runs out

There is enough money floating around in tech VC to fuel the current AI hype for another couple of years. There are hundreds of billions of dollars — family offices, pension funds, sovereign wealth funds — that are desperate to find returns.

But in AI in particular, the money and the patience are running out — because the systems don’t have a path to profitable functionality.

Stability AI raised $100 million at a $1 billion valuation. By October 2023, they had $4 million cash left — and couldn’t get more because their investors were no longer interested in setting money on fire. At one stage, Stability ran out of money for their AWS cloud computing bill. [Forbes, archive]

Ed Zitron gives the present AI venture capital bubble three more quarters (nine months), which would take it through to the end of the year. The gossip concurs with Ed on this likely lasting another three quarters. There should be at least one more wave of massive overhiring. [Ed Zitron]

Compare AI to bitcoin, which keeps coming back like a bad Ponzi. It’s true, as Keynes says, that the market can stay irrational longer than you can stay solvent. Crypto is a pretty good counterexample to the efficient market hypothesis. But AI doesn’t have the Ponzi-like structure of crypto — there’s no path to getting rich for free for the common ex-crypto-degen that would sustain it that far beyond all reason.

AI stocks are what’s holding up the S&P 500 this year. This means that when the AI VC bubble pops, tech will crash.

Whenever the NASDAQ catches a cold, bitcoin catches COVID — so we should expect crypto to go through the floor in turn.

AI will soon be doing reasoning! Well, ‘reasoning’

Financial Times headline, Thursday 11 April: “OpenAI and Meta ready new AI models capable of ‘reasoning’”. Huge if true! [FT, archive]

This is an awesome story that the FT somehow ran without an editor reading it from the beginning through to the end. You can watch as the splashy headline claim slowly decays to nothing:

- Headline: AI models capable of ‘reasoning’ are nearly ready. According to the subheading, they’ll come out this year!

- First paragraph: well, they’re not ready as such, but OpenAI and Facebook are “on the brink” of releasing a reasoning engine — trust us, bro.

- Fourth paragraph: the companies haven’t actually figured out yet how to do reasoning. But “We are hard at work in figuring out how to get these models not just to talk, but actually to reason, to plan … to have memory.” Now, you might think they’ve been claiming to be hard at work on all of these for the past several years.

- Fifth paragraph: it’ll totally “show progress,” guys. It’s “just starting to scratch the surface on the ability that these models have to reason” — that is, the models don’t actually do this.

- Sixth paragraph: current systems are still “pretty narrow” — that is, the models don’t actually do this.

- Thirteenth paragraph, halfway down: Yann LeCun of Facebook admits that reasoning is a “big missing piece” — not only do the models not do it, the companies don’t know how to do it.

- Fourteenth paragraph: AI will one day give us such hitherto-unknown applications as journey planners.

- Seventeenth paragraph: “I think over time … we’ll see the models go toward longer, kind of more complex tasks,” says Brad Lightcap of OpenAI.

In the final paragraph, LeCun warns us:

“We will be talking to these AI assistants all the time,” LeCun said. “Our entire digital diet will be mediated by AI systems.”

It’s hard to see that other than as a threat.

His lips are moving

Lie detectors don’t exist, but there’s no sucker like a rich sucker. Speech Craft Analytics managed to get a promotional flyer printed in the FT for its purported voice stress analysers.

Voice stress analysis is complete and utter pseudoscience. It doesn’t exist. It doesn’t work. Fabulous results are regularly claimed and never reproduced. Anyone trying to sell you voice stress analysis is a crook and a con man.

But lie detectors are such a desperately desired product that merely being a known fraud won’t stop anyone from buying them.

The FT story markets this to professional investors and securities analysts — they claim that they can tell when a CEO is lying in an earnings call.

How do they do this? It’s uh, AI! Totally not handwaving, magic or pseudoscience.

The use case they don’t name but obviously imply is, of course, to use this nigh-magical gadget on your own employees — whether it works or not. The article even admits that this sort of claimed AI use case risks becoming an engine for racism laundering. [FT, archive]

But consider: everyone involved is in dire need of leashing

For anyone who doubts that AI is precisely the same rubbish as blockchain, we present to you: the AI UNLEASHED SUMMIT! [AI Unleashed Summit, archive]

This amazing event ran in September 2023. The “Exponential Ai strategies” were promises to teach you how to do prompt engineering.

“Experience the ultimate skill mastery like being plugged into a Matrix-like machine!” — someone who’s totally watched The Matrix.

The promotional trailer video looks like a parody because it appears largely to be stock footage. [YouTube]

Impromptu engineering

A card game developer told PC Gamer how it paid an “AI artist” $90,000 to generate card art — because “no one comes close to the quality he delivers.” The “artist,” who totally exists, isn’t on social media. The cards are generic AI ripoffs of well-known collectible card games but with extra fingers and misplaced limbs and tails. It turns out PC Gamer was tricked into running a promotional story on an NFT offering — in 2024. [PC Gamer, archive]

Where is GPT-5 getting fresh training data? It looks like it’ll be getting a lot of valuable new authentic human interactions from honeypot sites designed to waste the time of spammy web crawlers. [NANOG]

Baldur Bjarnason: “Tech punditry keeps harping on the notion that nobody has ever successfully banned ‘scientific progress’, but LLMs and generative models are not ‘progress’. They’re products and we ban those all the time.” [blog post]


> This is an awesome story that the FT somehow ran without an editor reading it from the beginning through to the end.

Isn’t this how all journalism is done these days? Most of the editors have been fired, replaced by autonomous systems that do little more than spell check. Now of course, AI is going to replace that… Except they’re not, as you point out.

The FT is a paper with money, though!

Just last week Matt Asay, who is a fairly well-known columnist not devoid of technical credibility, published an article in InfoWorld that appears to have been little more than barely-rehashed text from a cease-and-desist sent by a major vendor to an open-source project they accused of infringing their license. Even more interestingly the publication timestamp is the morning of the day the C&D is dated. Strategic advance leaks of legal filings are nothing new but Asay appears to have not even asked the project for comment before publishing.

A thing about AI hallucinations: humans have them too. Ever see a human expert confidently assert something as fact and then not be able to back the opinion up with any evidence at all? Human fact hallucination. AI fact hallucinations are not something that is ever going to go away.

Just stumbled across this post and realised you’re the “50 foot blockchain” guy. I read and enjoyed that a while ago, so I’ve just bought your next book. Don’t let me down 🙂

David, love your writing. Magic *does* happen, just look at Tether lol

Snark aside, how would you define magic, David? Isn’t magic just an advanced form of creativity? Where wanted outcomes are achieved quickly and effortlessly by skillful “magic” users? Based on this definition, I would say that magic does exist. Just not in the cryptoworld.