“Effective Altruism” was the favourite charitable cause of Sam Bankman-Fried and Caroline Ellison of FTX and Alameda. Charity’s good, right? Maybe.

Effective Altruism is when you spend the charity money on buying Wytham Abbey. Photo by Dave Price, CC by-sa 2.0.

What is Effective Altruism?

Effective Altruism is a subculture that wants to quantify charity for maximum effectiveness. EA did not invent the concept of quantifying charity, though they tend to try to claim credit whenever the notion comes up. If charity’s going to exist, who wouldn’t want it to be effective?

EA as we know it started from the ideas of Australian philosopher Peter Singer. His 1972 paper “Famine, Affluence, and Morality” sets out his ideas on how to make the world better: that you should help people elsewhere in the world just as you help people nearer to you. [PDF]

This isn’t a bad idea: if you’re reading this, you’re likely much richer, relative to most of the world, than you think you are, and should probably put in more effort than you do. And keeping track of how you’re doing is reasonable. There are a lot of good people in EA, who are sincerely working their backsides off trying to make the world a better place.

A number of quantified philanthropy groups were inspired by Singer’s ideas in the early 2000s and came together in the early 2010s to form the “Effective Altruism” movement as we know it. [Washington Post]

There are a lot of good people in EA, and a lot of terrible ones. For instance, there are the increasingly well-documented and frankly cult-like abuses inside EA, dominated as it is by rich white men in tech. Who’d have thought a subculture that consciously constructed itself around making rich men feel good about themselves would treat women as commodities? [Time]

The subculture is also just a little too tolerant of weird scientific racists and eugenicists, as long as they have money or are old friends from the mailing lists. [Jacobin; Salon; expo.se]

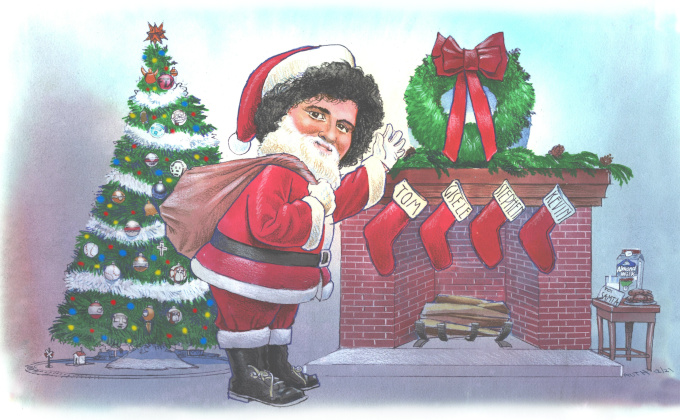

There’s also the bit where EA movement leaders spent the FTX money on a castle or two. But if you do the numbers, you’ll understand that the most effective possible action is to fit out a really nice castle where movement leaders can sit and contemplate the future robot apocalypse. [Truthdig; iRozhlas, in Czech]

The future robot apocalypse

The EA movement was more or less taken over by the “rationalists” — the subculture of the LessWrong website, founded by influential Artificial Intelligence crank Eliezer Yudkowsky. It was also Yudkowsky who came up with the name “effective altruism” for the broader movement.

Yudkowsky and his acolytes believe there is no greater threat to humanity than a rogue artificial super-intelligence taking over the world and treating humans as mere raw materials. They believe this apocalypse is imminent. To avert it, you must give them all the money you have. This is the most effective possible altruism.

Bankman-Fried and Ellison are active participants in the rationalist subculture, as are many Ethereum people. This makes the subject annoyingly on-topic for this blog.

EA formed as multiple disparate movements in a trenchcoat. The other parts were more focused on making ordinary real-world charity more effective per dollar. But the AI crank part kept doing the organisational heavy lifting, which made it hard to laugh their dumb ideas out of the room, even as they were an embarrassment to the other sectors who tried to do practical things. [Vox, 2015]

What does LessWrong do about the risk of rogue AIs? Yudkowsky runs the Machine Intelligence Research Institute (MIRI), which seems to be a place that collects mathematicians and sets them to work doing very little indeed. MIRI publishes an occasional paper on arXiv or a conference proceeding. The Make-A-Wish Foundation is literally a more effective charity than MIRI.

MIRI was substantially funded by Peter Thiel up to 2016. The largest individual donor in recent years has been Vitalik Buterin, the founder of Ethereum. Ben Delo from BitMEX is also a huge fan. [MIRI]

Like the rest of EA, the LessWrong subculture goes out of its way to worship wealth. Yudkowsky’s mind was blown by meeting the Sparkly Elites: “These CEOs and CTOs and hedge-fund traders, these folk of the mid-level power elite, seemed happier and more alive.” My dude, have you never heard of cocaine. Yudkowsky posted this piece just before the elites in question crashed the world economy. Whoops! [LessWrong, 2008]

LessWrong’s ideas and philosophy are frankly bizarre. I won’t attempt to explain them here. I wrote most of the RationalWiki article on the Roko’s basilisk thought experiment — about how the good future AI will, regrettably, be morally required to torture a simulation of you if you didn’t contribute enough money to bringing it into existence. The article sets out all the things you need to believe before this concept even makes any sense.

Elizabeth Sandifer — author of Neoreaction a Basilisk (UK, US), and the person responsible for me starting Attack of the 50 Foot Blockchain — and I also did a podcast with I Don’t Speak German in 2021 about LessWrong and the related Slate Star Codex blog by Scott Alexander. [Libsyn]

FTX: I’m one of you, would I ever steer you wrong?

The relationship between FTX and Effective Altruism is most usefully viewed as affinity fraud — where the con man tells the suckers “trust me, I’m one of you” before skinning them. The hard-working and dedicated people in EA trying to make the world a better place — despite the behaviour of the ones running the show — were cheated as badly by Sam Bankman-Fried as any FTX creditor was.

Bankman-Fried and Ellison put a lot of effort into promoting crypto, and especially FTX, to the EA crowd. One EA noted in 2021 that “the current EA portfolio is highly tilted towards Facebook and FTX.” [Effective Altruism Forum; Twitter]

EAs were largely horrified by how crooked Bankman-Fried and FTX turned out to be. FTX was a substantial and wide-ranging donor, and there’s a pile of programmes that had their funding abruptly cut to zero. And just wait until John Jay Ray III comes calling to claw back charitable donations in the FTX bankruptcy — especially if the donations are found to have been of stolen money.

The UK Centre For Effective Altruism’s board of trustees reviewed allegations that Bankman-Fried was engaging in unethical business practices as far back as 2018, when he just had Alameda — but ultimately took no action. [Semafor, 2022]

Recovering ex-EA Émile Torres has posted a list of very weird “EA” organisations that FTX gave money to. One was Lightcone Infrastructure, who are convinced they will save humanity by writing and hosting LessWrong’s forum software — really, that’s the activity they’re describing in the following text: [Lightcone]

This century is critical for humanity. We build tech, infrastructure, and community to navigate it. Humanity’s future could be vast, spanning billions of flourishing galaxies, reaching far into our future light cone. However, it seems humanity might never achieve this; we might not even survive the century. To increase our odds, we build services and infrastructure for people who are helping humanity navigate this crucial period.

Others include pronatalism, a movement that wants women in rich countries to get more into being baby machines, and is only coincidentally full of eugenicists and “great replacement” (of white people) conspiracy theorists. [Twitter; International Policy Digest; Business Insider]

The UK Charity Commission is inquiring into the Effective Ventures Foundation, which was substantially funded by FTX. [UK Government]

The EA movement is occasionally capable of introspection. The Effective Altruism Forum looks into the Protect Our Future PAC, the main public vehicle for Sam Bankman-Fried’s political donations. This PAC claimed to be about pandemic prevention and “longtermism” (the future welfare of 10^54 hypothetical emulated humans, weighted much more heavily in their calculations than mere real-world humans suffering in the present), but what the PAC actually did was to spend its money trying to parachute pro-crypto candidates into safe Democratic seats. [Effective Altruism Forum]

“Doing bad to do good” surely won’t become just an excuse to do the bad thing for money

Every atrocity in history was justified as being for the greater good. Fortunately, EAs, like crypto guys, don’t bother with deprecated legacy nonsense like reading books — it’s much more Effective to reason things out from first principles. “Philosophy goes through self-conscious, periodic bouts of historical forgetting.” [Crooked Timber]

EA isn’t necessarily a bad idea. The realisation of it has some worrying bits, though. There’s hardly a good idea that you can’t turn into a bad idea if you just do it hard enough.

“like i’m sorry but if you have convinced yourself that you need to devote your life to enabling 10^54 simulated consciousnesses to come into being, you are in a cult and you need to stop and take stock of the life choices that brought you to this point” — Бди!

doing ineffective altruism. donating a yacht to a five-year-old with leukemia. dropping bitcoins from a helicopter over remote Alaskan native villages. $7 billion to the Connecticut Trolley Museum

— The Goddamned Penguin (@who_shot_jgr) November 26, 2022

Buying somewhere that Joachim von Ribbentrop stayed is on message for EA too.

I wonder if they have fights over who gets to sleep in the room he used?

I’m quite certain it’s entirely coincidence.

I wouldn’t be surprised if none of them knew who Joachim von Ribbentrop was. If it was somewhere Hitler once stayed at, or a Nazi leader’s well-known vacation home, as with Madison Cawthorn, it would be harder to chalk it up as a coincidence.

Yeah, no-one’s heard of a Nazi infamous enough to be one of the three campaigning in the Monty Python’s North Minehead By-election sketch as Ron Vibbentrop, alongside that nice Mr Hilter and Heimlich Bimmler.

Hilarious.

My 10^54 human emulation reports that Roko’s basilisk dies a horrible death in the fabulous future because, like Marvin the paranoid android, nobody bothers to replace one of his diodes. We apologize for the inconvenience.

You wrote the Roko’s basilisk RationalWiki article! I found the site before I even followed crypto and loved that one. It was only after watching Line Goes Up that I became a r/buttcoin addict and started reading your blog. I always felt like your style felt familiar. That sass, that discontent for the dishonest. No one can do it quite like you.

Because every story about Eliezer Yudkowsky requires it, I will note that prior to becoming the techbros’ pet philosopher his primary claim to fame wasn’t any sort of practical or theoretical work on AI or compsci in general, it was the Harry Potter fanfic he wrote to promote his totally-not-a-cult research institute.

which is on-topic for here, cos Caroline Ellison is a HUGE fan of it

Wow, I first knew of “LessWrong” because of a fanfiction they wrote, “Harry Potter and the Methods of Rationality”. I never realised it’s all connected, and its author is just as much a piece of s–t as the author of OG Harry Potter.